Submitting this one for @basvanditzhuijzen

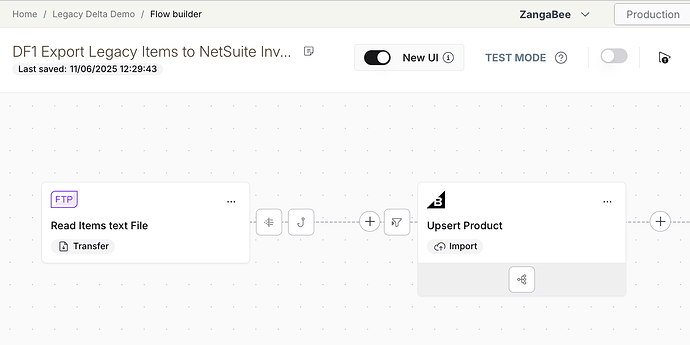

For an integration we made with a legacy WMS system, the only way to export product data was to get a nightly export of all products in the database.

This export was very large (thousands of lines), and we could not send all items to the target system as this would cause us to go over the API rate limits.

As an added difficulty, the export-file was formatted as a flat fixed width csv-file.

Leveraging Celigo’s new Lookup cache and scripting, we can build a flow in Celigo that checks if the record has changed between the previous run and now, turning our flow into a 'Delta' flow, that only processes records that have changed.

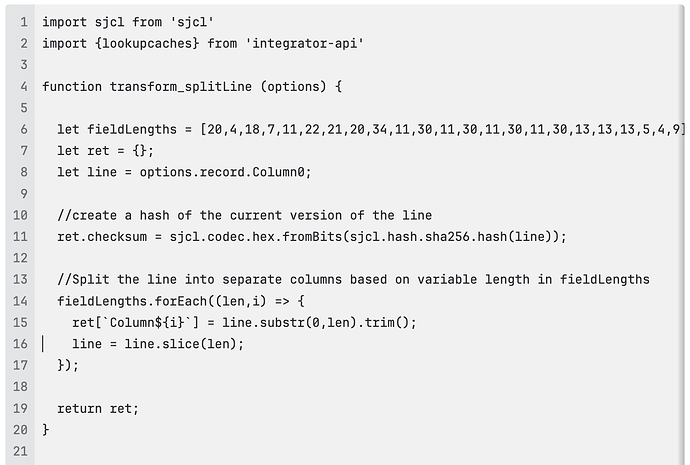

Splitting the single line into separate fields and generating a checksum

The export loads the txt file from an ftp server, as a csv file with pipe-delimited fields. This creates a single column of data per line.

Using the following script as a transform script-hook we create a checksum of the incoming item line, and split the string into separate columns:

If your source data is already split into columns you can directly create a checksum of the options.record object.

The Result:

Comparing current checksum with cached version

The check for changes happens on the Presave script on the export:

For each of the item records in the current page of data, we load the last-known checksum from the lookup cache.

Next we compare the checksum of the current record with the checksum that is in the cache. If it is different, we know the record has been changed and can send it on to the target system. We do this by setting the 'changed' boolean value, that is used as a filter in the import.

Any changed checksums are then written back into the lookup cache, to compare the next run.

Input filter to check if we need to update in BigCommerce:

Notes:

- Because we call the lookupcache-api outside of any loops, we only need to call it once per page of data instead of for every record. This improves performance and speed of the integration. Just make sure that the page-size of your export is smaller than 1000, the pagesize of the lookupcache api, so we only need to do one call to the cache-api.

- If you do not have access to lookup caches you can also use an external database for this, or save the checksum in your target system.

- the lookup cache will need to be registered to your integration by using it in a mapping in a 'dummy' flow .