I am looking for some advice on the best way to export receipt data (header plus multiple detail rows) from the MYOB Acumatica API into a legacy application SQL database with header and detail transaction tables. My immediate thinking was to use an intermediate staging table where I flatten the data and then have a subsequent step to run a stored procedure that populates the data into the appropriate transactional tables in the database and apply any error checking/handling there to capture exceptions. However, I was wondering if there was a smarter way to pass the array using a sql table variable as a parameter to the stored procedure and process each receipt header & footer that way. Any advice would be welcome or other alternative approaches to importing header/detail receipt transaction into a SQL database.

It sounds like you're about on the right track. There are a couple patterns you could do here:

- Use the

Use SQL query once per page of recordsor theUse SQL query once per recordoption on your import to pass data to a stored procedure where it handles everything. - Have one flow that exports all of your data and uses the

Use bulk insert SQL query (recommended)option to load all if to a staging table. After that, use theNext integration flowoption in the flow settings to run a second flow after this first flow finishes. The second flow would have a simple export that just exports a single record (by doing select 1 or count(*) from table). Then the import on that second flow triggers a stored procedure or runs a merge/insert/update command. - If you want a complex pattern to put it all on 1 flow, you could also do this, where you have an import to batch insert to staging, then another import that runs after everything is loaded.

Thanks Tyler for the prompt response! It looks like my options are somewhat limited as the client is using an on premise version of Microsoft SQL server so the Bulk Insert option is not available as I believe that requires a cloud instance of SQL. I also can’t find the option for “Use SQL query once per record” so is that also restricted to a cloud instance of SQL too?

What options do you have?

That's basically equivalent to the “Use SQL query once per record” option. I'm not 100% certain, but I think you can put statements in there that aren't INSERT and UPDATE even though the field label says it's for INSERT or UPDATE. Can you try putting your stored proc call in the INSERT query?

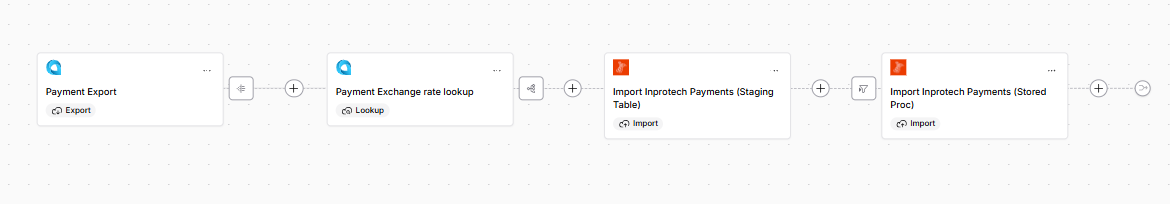

You are quite right. This screen shot was from my first step that looks to populate the staging table. The next step then calls the stored procedure. So my approach will be to add the detail rows to the staging table in the first step and then execute the stored procedure with a single row for the header that is passed to the stored proc and will run some validations and then create the transaction header in the database following be processing the details rows from the staging table. I think that should achieve what I need and also allow the transaction to rollback and report and error should any validation errors be encountered by the stored proc.

I will let you know if I hit any snags. Thanks again for your prompt advice, much appreciated.

Hi @tylerlamparter I was able to get the flow operational but was hoping to improve the logic by including a unique identifier for the current flow so that this could be saved as a column in the staging table. So I use handlebars to derive the JobID which all works well until I then try to pass the same JobID into the stored procedure in the subsequent step to help identify the rows to process from the latest execution of the flow. It looks like each step is allocated a different JobID. Is there something I can use that will allow me to identify the specific execution of the flow and pass this between the 2 steps? At the moment I have a workaround to clear down any duplicated data as a prior step but would ideally like to retain the staging data as a further audit trail for problem diagnostics. Any suggestions appreciated.

Use {{{job.parentJob._id}}}. All flow steps get their own child job (which is the ID you're referencing), and all flow runs get the parent job ID, which would be the same between flow steps for that run.

Thanks again Tyler, worked like a charm! I did look at the parentJob ID but thought it was the ID from the previous step so I was comparing it to the JobID of the prior step. Using the parent ID in both steps was what I missed.